Using artificial intelligence to create video games | Pocket Gamer.biz

Talk of artificial intelligence is all the rage right now as companies such as Google and Microsoft look for ways to incorporate AI tools into their existing products. But the technology has applications across many industries.

One such industry is video games. We spoke with Nihal Tharoor, CEO and co-founder of ElectricNoir Studios, about the team’s mobile games and how AI-generated content is helping them define a new era of entertainment.

Tell us about yourself. How did things get started at ElectricNoir?

Ben [Tatham, co-founder] and I met in advertising school nearly a decade ago and have worked together ever since: first in ad agencies, and now as co-founders of ElectricNoir.

Around 2019, we started thinking about the content we see every day on our mobile phones – text messages, news articles, selfies, voice notes – and how these can also be creative devices for storytelling. Our phones play such a large role in our lives, and we realised there was a massive opportunity in developing dramatised entertainment that was native to mobile.

We decided to go for it and developed our first release in 2020, called Dead Man’s Phone, a crime drama surrounding suspicious deaths in London. As the lead detective, you use your phone to direct the investigation, interacting with other characters and accessing the victim’s phone and police records.

The first episode of Dead Man’s Phone was nominated for a BAFTA in 2020, and we launched it on our proprietary app, Scriptic, in 2022. It has since had over a million organic installs. Last year, Netflix made seasons 1 and 2 (renamed Scriptic: Crime Stories) available to its subscribers.

Our work is a blend of games, film and TV, and the growth of our team over the last three-and-a-half years really reflects this. Both our leadership and our wider team bring experience from across the spectrum of visual and interactive entertainment.

Our catalogue of content has grown alongside the team. In addition to Dead Man’s Phone, we have released multiple mini-series, including our AI-generated horror mini-series, Dark Mode.

How did the idea of using AI to generate game content come about?

We’ve been using AI tools in our production processes since we began working on the full season of Dead Man’s Phone in 2021. Creating ‘phone-first content’ comes with its own challenges and production requirements, and we initially experimented with AI as a problem-solving tool. For example, the content has to look natural on a vertical phone screen, so if we wanted to use horizontal footage, we could use AI to manipulate the image instead of doing another costly and time-consuming shoot.

We also used AI tools to help our writers produce copy more quickly for formulaic content, such as news articles that exist within a game world or transactional dialogue between characters.

But while the time and cost efficiencies of AI have been really useful, we wanted to challenge ourselves to be more innovative with this technology in a creative context. There’s something quite uncanny about generative AI – as we progressed with the project, the team realised we could use this to our advantage. We thought horror would be the perfect playground for experimenting with AI-generated visuals, text, and sound – leaning into, rather than shying away from, the uncanny valley effect.

Which AI tools are the team using and what purpose do they serve?

For Dark Mode, the team tried a host of different generative AI tools and primarily used three: ChatGPT by OpenAI, to create everything from realistic dialogue to story synopses; DALL-E 2, also from OpenAI, to create photorealistic images from short text descriptions; and Murf.AI, a highly versatile AI-powered voice-sample generator featuring dozens of AI ‘actors’ capable of different accents, intonations and emotions, to create voice notes and phone calls.

There’s such a vast array of AI tools available on the market that we’re continuing to experiment with what’s out there for creative innovation. We’ve recently been using Midjourney for generating images in our next title – a courtroom drama – and it’s been really exciting to see how effectively AI can be deployed in a realistic genre too.

Your latest game, Dark Mode, is a horror title. How did you ensure the AI understood its tone?

From the outset, we wanted to use AI tools for creative collaboration, using the tech to push the limits of our imaginations and inspire us further to get to an even more horrifying end result.

Our writers really went on a creative journey with the different AI tools – they were constantly workshopping the outputs from the AI and evolving the inputs they used to make sure the AI was producing content that was faithful to the tone we were trying to achieve.

It was also quite eye-opening and fascinating at times – the AI would come out with really unexpected things which pushed the team’s creativity, even influencing the direction of the storyline in the end.

For the first episode, our writers began with DALL-E 2, giving it a prompt to create a kindergarten. The outputs our writers got – of creepy paperwork insects and evil children – inspired them to keep going and expand their vision of the original idea, eventually generating an entire dream world of unique and nightmarish horrors.

Thanks to Murf.AI, we were able to create disturbing voice notes from the evil children that didn’t require any actors and could be rewritten over and over again to get the perfect creepy sample.

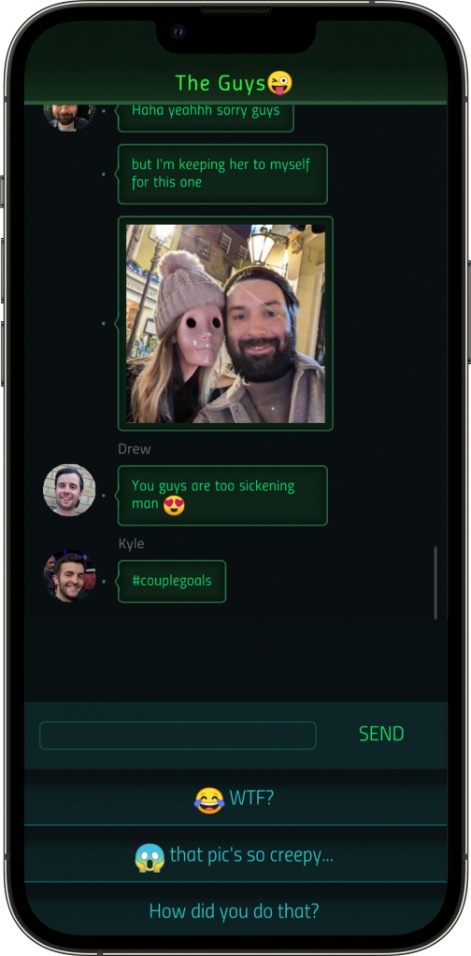

In the second episode, our writers worked with ChatGPT to develop texts from a supernatural imposter, creating a character that sounded defensive and uncannily almost human. We then used DALL-E 2’s powerful inpainting tool to create ‘before’ and ‘after’ photos of the main character’s friends, as they gradually turned inhuman themselves under the influence of the imposter.

During the course of making episodes 1 and 2, our writers generated a whole range of ‘accidentally-created’ horrors with DALL-E 2, which in turn inspired them to develop the plot of the third episode. All throughout the production process, our writers used the AI outputs as a jumping-off point for developing the story further, staying in control of the quality of the game and its tone.

How does AI improve the creative process behind these experiences?

We used ChatGPT and DALL-E 2 as collaborative partners in our creative process – they were almost additional members of our team, bringing their own ideas and supporting us in executing our vision.

We strongly believe that AI can be used fruitfully to advance human creativity – not replace it – and that when integrated into a production and creative process thoughtfully, you can achieve impressive results. While we were able to produce Dark Mode over a shorter timeframe and on a smaller budget, the end result is still compelling and sophisticated entertainment because the team was so mindful of quality.

Our writers worked alongside the AI every step of the way and were essential to ensuring the text, images, and sound samples we used fit their brief and inspired the horror effect they were after. Players seem to be pleased with the results so far – 94% of those who start a Dark Mode episode finish it, and 67% repeat an episode to get an alternate ending.

As an industry, we’re still in the early phases of using AI in our creative processes, but I’m confident that generative AI is an asset that can be used to unleash our imaginations.

Where do you see the future of generative AI heading and how could it further impact your projects?

As competition in the market intensifies, I expect we’ll see other powerful AI tools emerge that can inspire human ingenuity and help us develop even more innovative content and entertainment experiences. We’re excited to see how other creative minds leverage these technologies, what inspires them, and how they use them in their production processes.

At ElectricNoir, we see generative AI impacting our future projects in a host of ways. We’ll be continuing to use tools like ChatGPT and DALL-E in the production and creative processes of our own first-party content, and see use cases beyond the horror genre. We’re also looking to use these technologies as part of our wider UGC [User Generated Content] aims, to help empower our audiences to become creators themselves and build their own worlds, characters, and dramas.

What’s next for you and the team?

We’ll be sticking to our mission to create high-quality entertainment and interactive experiences which put our audience at the heart of the story.

As well as continuing to put out new first-party content, IP partnerships with third parties would be a natural next step for us, enabling audiences to dive into the worlds of their favourite books, films, or TV series, interact with beloved characters, and assume the main role in the story.

We’re also developing a UGC solution that would give audiences the ability to create their own cinematic universes and write their own stories. The platform would be no-code, so that you can be the writer, director and star of your own drama without needing any technical skill beyond using your mobile phone.

And of course, we’ve been impressed with the results from Dark Mode, and so we definitely plan on using AI to generate interactive content in other genres in the future.

Originally written for Beyondgames.biz